1st place in 2023 Samsung AI Challenge.

My team (Keondo and me) took 1st place out of 212 teams in the 2023 Samsung AI Challenge (camera-invariant domain adaptation). 😄

Competition on Developing Domain Adaptive Semantic Segmentation Algorithms for Autonomous Driving

(Prize: 10 million KRW)

[Problem]

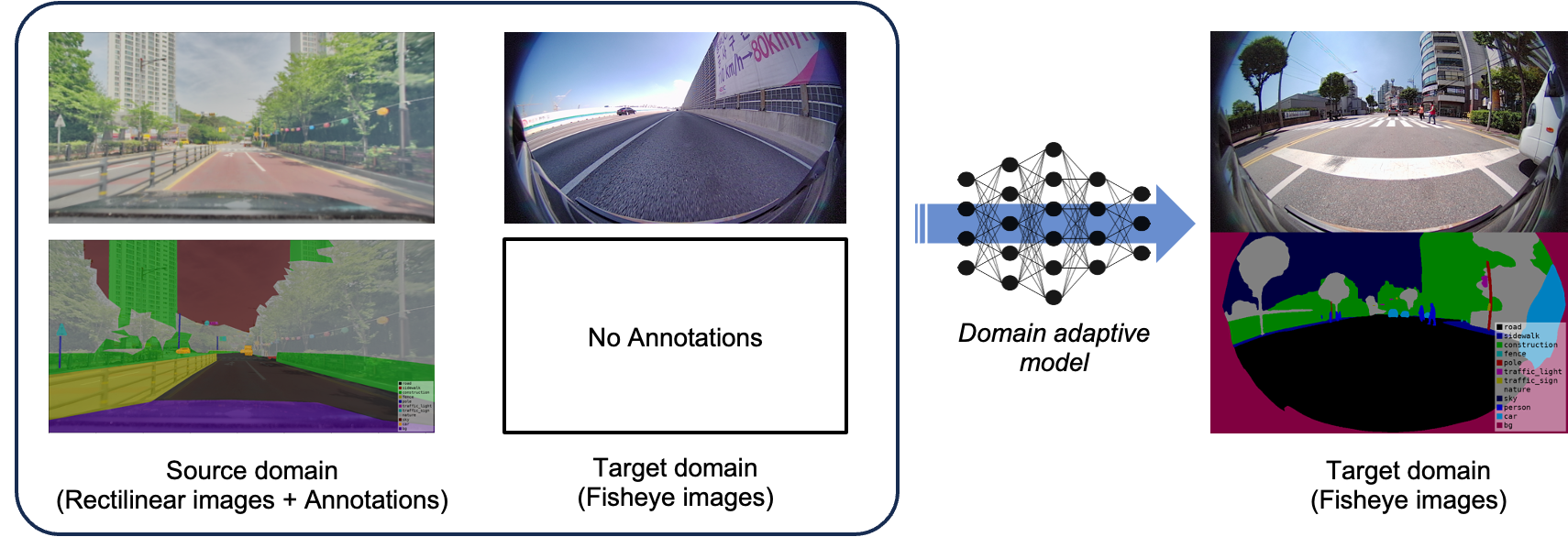

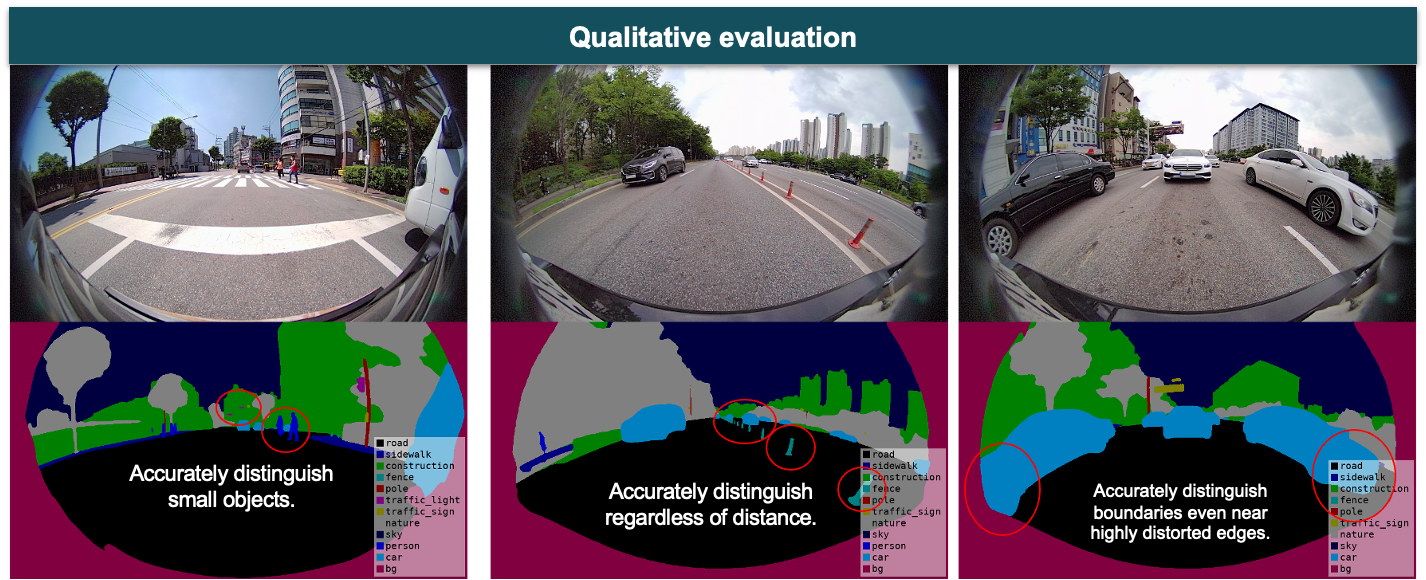

Autonomous driving relies on various sensors to perceive the surrounding environment and control the vehicle accordingly. With camera sensors, images differ from one another (a domain gap) depending on factors such as the installation position, the type of sensor, and the driving environment. Previous studies have widely applied Unsupervised Domain Adaptation techniques to counter the drop in recognition performance caused by photometric and texture discrepancies between images. However, most existing research does not consider the geometric distortions caused by the optical properties of cameras. This competition therefore calls for AI algorithms that achieve high-performance Semantic Segmentation on distorted images (Target Domain: *Fisheye) using non-distorted images (Source Domain) and their labels.

- *Fisheye: images captured with a fisheye lens that has a 200° field of view (FOV)
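To make the geometric gap concrete, here is a minimal sketch of warping a non-distorted (rectilinear) image or label map into a fisheye-like view. It is only an illustration, not the competition's data pipeline and not our solution. The equidistant projection model (`r = f * theta`), the function name `rectilinear_to_fisheye`, and the parameters `f_rect`, `f_fish`, and `fill` are all assumptions made for this example. Nearest-neighbour sampling is used so that class IDs in a label map are never blended.

```python
import numpy as np

def rectilinear_to_fisheye(img, f_rect, f_fish, fill=0):
    """Warp a rectilinear image (H, W[, C]) into an equidistant-fisheye view.

    f_rect: assumed focal length (pixels) of the source pinhole camera.
    f_fish: assumed focal length (pixels) of the simulated fisheye lens.
    fill:   value for output pixels with no source pixel (e.g. an ignore label).
    """
    h, w = img.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0

    # Offsets of every output (fisheye) pixel from the image centre.
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    dx, dy = xs - cx, ys - cy
    r_fish = np.hypot(dx, dy)

    # Equidistant model: radius in the fisheye image is f_fish * theta.
    theta = r_fish / f_fish
    # A pinhole image cannot contain rays at 90 degrees or more off-axis.
    visible = theta < (np.pi / 2 - 1e-6)

    # Pinhole model: radius in the source image is f_rect * tan(theta).
    r_rect = f_rect * np.tan(np.where(visible, theta, 0.0))
    scale = np.where(r_fish > 0, r_rect / np.maximum(r_fish, 1e-12), 1.0)

    # Nearest-neighbour lookup of the source pixel for each output pixel.
    src_x = np.rint(cx + dx * scale).astype(np.int64)
    src_y = np.rint(cy + dy * scale).astype(np.int64)
    inside = visible & (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = np.full_like(img, fill)
    out[inside] = img[src_y[inside], src_x[inside]]
    return out
```

Applying the same warp to an image and to its label map (with `fill` set to an ignore value for the labels) keeps the two aligned. Note that a rectilinear source can never cover the full 200° field of view, so the outer region of such a simulated image stays empty.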

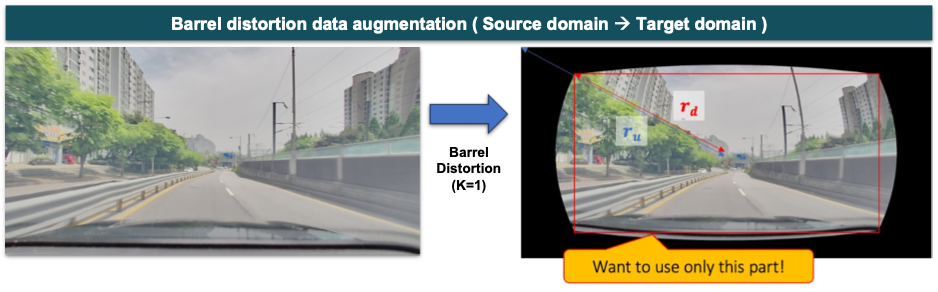

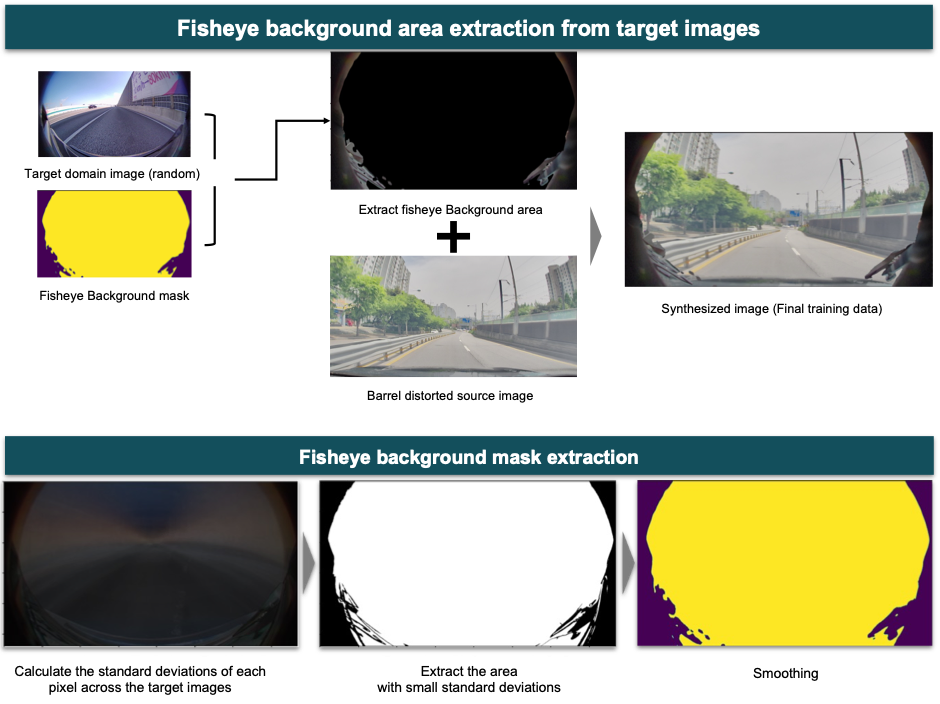

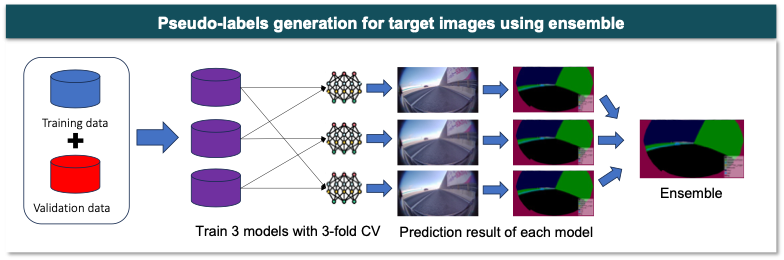

[Our solution]

[Results]

(Public score: mIoU 0.67502, Private score: mIoU 0.67711)
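For readers unfamiliar with the metric, mIoU (mean Intersection over Union) averages the per-class overlap between predicted and ground-truth masks. The sketch below shows one common way to compute it with NumPy. The competition's exact scoring script may differ (for example in how it handles ignored pixels or averages across images), and the function name `mean_iou` and the `ignore_index` default of 255 are assumptions for this example.

```python
import numpy as np

def mean_iou(pred, target, num_classes, ignore_index=255):
    """Mean IoU over the classes that appear in the prediction or the target.

    pred, target: integer class maps of the same shape.
    Pixels whose target equals ignore_index are excluded (assumed convention).
    """
    pred = np.asarray(pred).ravel().astype(np.int64)
    target = np.asarray(target).ravel().astype(np.int64)

    # Drop ignored pixels before counting.
    valid = target != ignore_index
    pred, target = pred[valid], target[valid]

    # Confusion matrix: rows are ground-truth classes, columns are predictions.
    cm = np.bincount(
        target * num_classes + pred, minlength=num_classes ** 2
    ).reshape(num_classes, num_classes)

    intersection = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - intersection

    # Skip classes that occur in neither map to avoid dividing by zero.
    present = union > 0
    return float((intersection[present] / union[present]).mean())
```

The function assumes every predicted value lies in `[0, num_classes)`; predictions outside that range would need to be remapped or masked first.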

[References]

[1] M. Cordts et al., “The Cityscapes Dataset for Semantic Urban Scene Understanding,” in Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

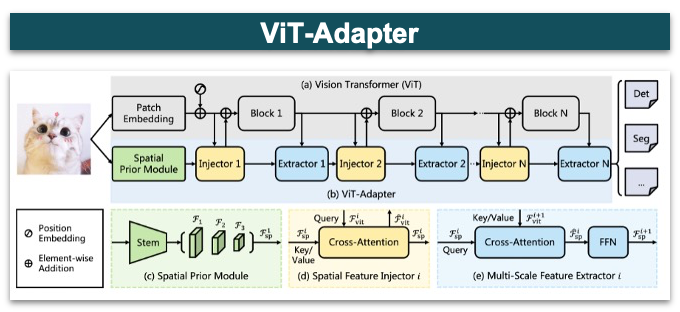

[2] https://github.com/czczup/ViT-Adapter