MRViz

MRViz: Visualization System for Analyzing Reliability of CNN-based Deep Learning Model

2021.03 - 2022.02

[Key Concept]

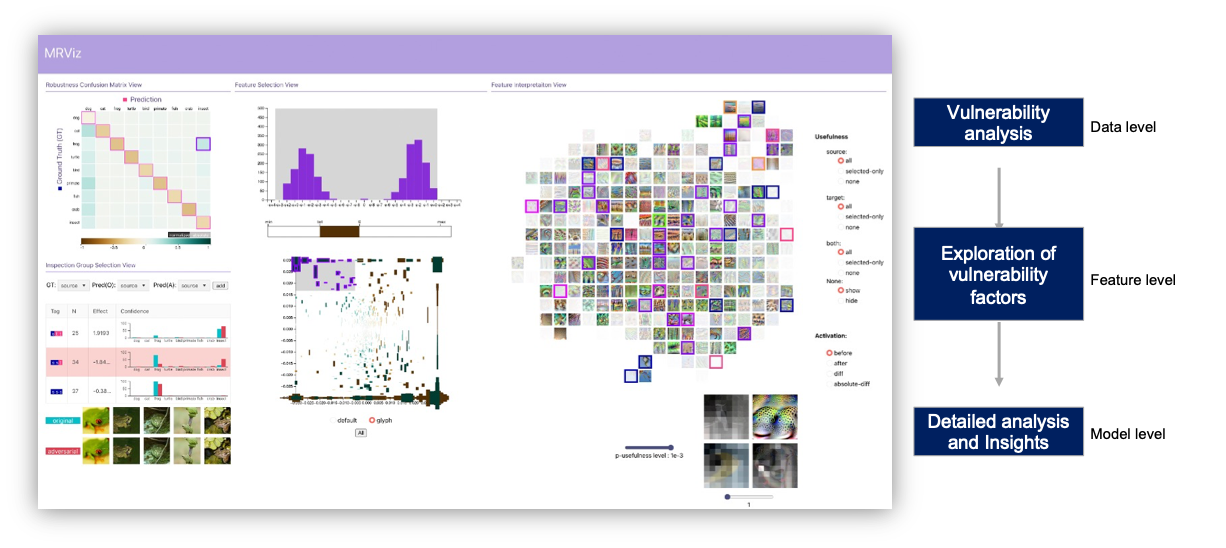

With the emergence of convolutional neural networks (CNNs), artificial intelligence-based applications have become a significant part of our daily lives. However, because deep learning models are generally black boxes, it is difficult to respond to vulnerabilities such as the performance degradation caused by adversarial attacks. Threats to model reliability can be catastrophic in safety-critical scenarios such as autonomous driving. Therefore, it is necessary to identify the causes of such threats and improve the model so that it behaves as humans intend. However, deep learning models are built without the involvement of human cognitive processes, which makes them challenging to analyze. We present MRViz, a visualization and interaction tool that detects model reliability threats and derives improvement strategies by analyzing their causes. With MRViz, users can 1) discover reliability threats in deep learning models, using vulnerability to adversarial attacks as a clue, and 2) use novel feature-based interpretability metrics to identify differences between the human cognitive process and the model's decision-making process. To evaluate the usability of MRViz, we conducted a case study, which yielded valuable insights for improving the reliability of CNN-based deep learning models.